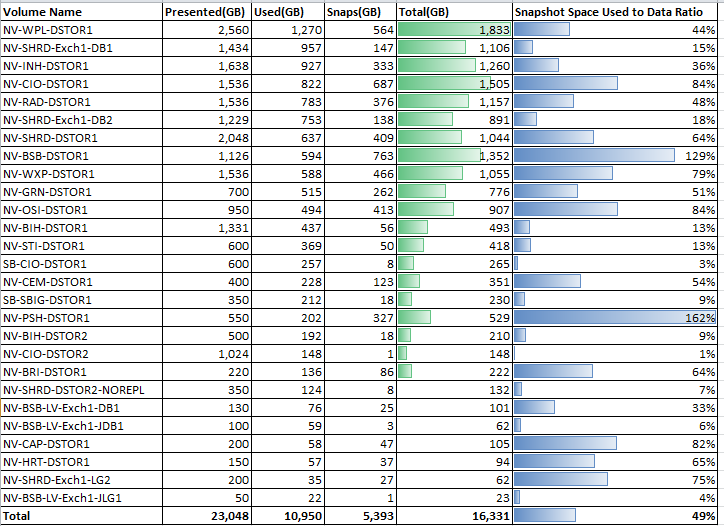

The absence of real data, which is typically shielded by the spin doctors in manufacturers' marketing departments and their grip on the blogosphere, compels me to provide this information so that prospective buyers have more than one-dimensional posts from the Spiceworks and VMware forums to help them make the right purchasing decision. The table below (see picture) contains the per-volume space usage from our Nimble CS460. I wrote a lengthy technical article on switching from NetApp to Nimble a while back, so I'll spare you the deep dive into our environment and the technical justification for choosing Nimble. However, so you don't have to go to the other article, here is how our SAN environment is best characterized:

- Every volume runs some number of VMs (more than 1, fewer than 10).

- The VMs on each volume are typically XenApp, file servers and sometimes MS-SQL.

- We do have some Exchange databases and log files, which have their own volumes.

- Exchange has 1,000 mailboxes.

- We run about 100 VMs in total.

The difference in data usage (GB) between the “presented” column and the “used” column is disk space savings. The reclaimed disk space comes from a combination of thin provisioning (on the Nimble) and compression. Note that we do not thin provision the VMs; we zero them out, and the Nimble then sees the zeros as free space and does not write those zeros to disk.
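To make the "zeros are free space" idea concrete, here is a minimal sketch of zero-block detection. It is not Nimble's implementation; the 4 KiB block size and the function names `blocks_to_write` and `allocated_bytes` are hypothetical, chosen only to show why a fully zeroed VM disk can be large when presented but small when used.

```python
# Hypothetical sketch of zero-block detection; not Nimble's actual code.
BLOCK_SIZE = 4096  # hypothetical block size in bytes


def blocks_to_write(data: bytes, block_size: int = BLOCK_SIZE) -> list[int]:
    """Return the offsets of blocks that contain at least one non-zero byte."""
    zero_block = bytes(block_size)
    offsets = []
    for offset in range(0, len(data), block_size):
        block = data[offset:offset + block_size]
        # A trailing partial block is compared against zeros of its own length.
        if block != zero_block[:len(block)]:
            offsets.append(offset)
    return offsets


def allocated_bytes(data: bytes, block_size: int = BLOCK_SIZE) -> int:
    """Bytes actually allocated when all-zero blocks are never written."""
    return sum(len(data[off:off + block_size]) for off in blocks_to_write(data, block_size))


# A 64 KiB "virtual disk" that was zeroed, then had 5 bytes written into block 3.
disk = bytearray(16 * BLOCK_SIZE)
disk[3 * BLOCK_SIZE:3 * BLOCK_SIZE + 5] = b"hello"
print(len(disk), allocated_bytes(bytes(disk)))  # presented vs. used
```

The presented size stays 65,536 bytes while only the one touched block is allocated, which is the same gap the "presented" and "used" columns show at volume scale.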

I didn't include compression ratios in the table because I think they make it harder to get valuable insights out of the general trends in this data. However, so as not to ignore compression:

- As a whole, we are seeing about a 30% compression rate.

- The compression rate of any one volume largely depends on the data and applications that reside on the volume.

- We are seeing between 10% and 50% compression, with little rhyme or reason except that SQL compresses well and Exchange does not.

- Compression seems slightly less effective than deduplication, except on SQL or very large file shares (NAS). This topic (compression vs. deduplication) is larger than this post.
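The point that compression "largely depends on the data" is easy to demonstrate. The sketch below uses Python's `zlib` purely as a stand-in (it is not the algorithm the Nimble uses) and assumes that a "30% compression rate" means 30% of the space is saved. Repetitive, text-like data stands in for data that compresses well; random bytes stand in for data that does not.

```python
import os
import zlib


def space_saved(data: bytes, level: int = 6) -> float:
    """Fraction of space saved by compression: 1 - compressed / original."""
    if not data:
        return 0.0
    compressed = zlib.compress(data, level)
    return 1.0 - len(compressed) / len(data)


def ratio_from_savings(savings: float) -> float:
    """Convert a savings fraction (e.g. 0.30) into an N:1 compression ratio."""
    return 1.0 / (1.0 - savings)


# Highly repetitive rows compress very well; random bytes do not compress at all
# (zlib's framing overhead can even make the output slightly larger).
repetitive = b"INSERT INTO orders VALUES (1, 'widget');\n" * 5000
random_like = os.urandom(200_000)
print(f"repetitive: {space_saved(repetitive):.1%} saved")
print(f"random:     {space_saved(random_like):.1%} saved")
print(f"30% saved is about {ratio_from_savings(0.30):.2f}:1")
```

Under that assumption, the 30% figure above corresponds to roughly a 1.43:1 ratio.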

Looking at the table, we see that our Nimble CS460 is presenting 23TB of capacity to VMware. Of that 23TB, only 11TB is used. On that 11TB of data we keep 6 weeks of retention, broken into a grandfather-father-son scheme, i.e. we have hourlies for a day, then dailies for a week, then weeklies for 6 weeks. Snapshot technology is used to achieve the retention policy. The 11TB of used data carries BACKUP BAGGAGE of 5.3TB from the 6 weeks of snapshots. Put another way, our 6-week retention schedule costs 49% of the used data. The table breaks this out by individual volume.
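The retention scheme described above can be sketched as a pruning rule over snapshot timestamps. This is only an illustration under stated assumptions: the function name `gfs_keep` is hypothetical, and the exact bucket boundaries (last 24 hours, last 7 calendar days, last 6 ISO weeks, keeping the newest snapshot in each day or week bucket) are my guesses, not Nimble's documented schedule behavior.

```python
from datetime import datetime, timedelta


def gfs_keep(snapshots: list[datetime], now: datetime) -> set[datetime]:
    """Return the snapshots a simple grandfather-father-son policy retains.

    Assumed policy (mirrors the schedule described in the text):
      son:         every hourly snapshot from the last 24 hours
      father:      the newest snapshot of each day for the last 7 days
      grandfather: the newest snapshot of each ISO week for the last 6 weeks
    """
    keep: set[datetime] = set()
    newest_per_day: dict = {}
    newest_per_week: dict = {}
    for snap in snapshots:
        age = now - snap
        if age < timedelta(0):
            continue  # ignore timestamps in the future
        if age <= timedelta(hours=24):
            keep.add(snap)
        if age <= timedelta(days=7):
            day = snap.date()
            if day not in newest_per_day or snap > newest_per_day[day]:
                newest_per_day[day] = snap
        if age <= timedelta(weeks=6):
            week = tuple(snap.isocalendar()[:2])  # (ISO year, ISO week number)
            if week not in newest_per_week or snap > newest_per_week[week]:
                newest_per_week[week] = snap
    keep.update(newest_per_day.values())
    keep.update(newest_per_week.values())
    return keep


# Eight weeks of hourly snapshots collapse to a few dozen retained points.
now = datetime(2024, 1, 31, 12, 0)
hourly = [now - timedelta(hours=h) for h in range(24 * 7 * 8)]
kept = gfs_keep(hourly, now)
print(len(hourly), "taken,", len(kept), "kept")
```

Snapshot space is driven by the blocks that changed across all retained points, not by the number of points, so thinning mostly controls how far back you can restore.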

One key takeaway, for those not studying the table, is Exchange, which has very small snapshot-to-data-used ratios (less than 20%). Intuitively this makes sense: the rate of change (a day's worth of email) is only a small fraction of the total mail people keep over the life of their mailboxes, so as long as your SAN can do efficient snapshots (which Nimble does), your snapshots will be small relative to the whole database.

Some volumes have higher snapshot-to-data-used ratios than they should, which I believe is due to misconfigurations somewhere on the servers (I don't know what else would cause a ratio of over 100%). I will have to look into those and see why we have such large data change rates on those volumes.
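A rough model helps explain both the small Exchange ratios and the volumes above 100%. The sketch assumes, as an upper bound, that every block changed during the retention window is unique (never rewritten again within the window), so snapshot space grows linearly with daily change rate times days retained. The function names, the 42-day window (6 weeks) and the sample change rates are hypothetical illustrations, not measurements from our volumes; the model also ignores compression of snapshot data.

```python
def snapshot_ratio(snapshot_tb: float, used_tb: float) -> float:
    """Snapshot space as a fraction of the live data it protects."""
    if used_tb <= 0:
        raise ValueError("used_tb must be positive")
    return snapshot_tb / used_tb


def estimated_ratio(daily_change: float, retention_days: int) -> float:
    """Upper-bound snapshot-to-data ratio, assuming every changed block is unique.

    daily_change is the fraction of the volume changed per day (e.g. 0.004).
    """
    return daily_change * retention_days


# Aggregate figures from the post: 5.3TB of snapshots on 11TB of used data.
print(f"overall: {snapshot_ratio(5.3, 11):.0%}")

# Hypothetical change rates over a 42-day window.
for label, rate in [("mail-like, 0.4%/day", 0.004), ("high churn, 3%/day", 0.03)]:
    print(f"{label}: {estimated_ratio(rate, 42):.0%}")
```

Under this model a ratio above 100% requires more than a full volume's worth of unique changes within the window, roughly 2.4% of the volume per day sustained for 42 days, which is why it points to something on the servers rewriting data constantly.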

What to make of it? To keep the BACKUP BAGGAGE analogy going, Nimble packed light for this trip! This is great space efficiency. I can maintain my primary backups for 45 days at a cost of less than 50% of the total space used by the servers. I can't think of anything more efficient, only equally efficient (e.g. NetApp or Compellent, or so I have heard from their sales reps). None of the software solutions (Veeam, PHD Virtual, Symantec Backup Exec, Acronis, etc.) can be that efficient. Also, combining the primary backups taken via snapshots with Nimble's offsite replication is very powerful, because my offsite replication doesn't suffer from the hiccups and software issues that make other tools a time suck.

If you are looking at SAN solutions for primary backups (snapshots) and offsite backups (SAN-to-SAN replication), you'll want to get some real-world numbers to make sure they are sufficiently efficient. There is one vendor that starts with an E and ends with an MC that used to have high penalties for leveraging snapshots. I have also heard unconfirmed rumors that another vendor, one that starts with an E and ends with a logic, has horribly inefficient snapshots because the page size (minimum block change size) is so large that the “snap to data ratio” grows out of control, forcing you to use software-based backups or avoid long retention policies on the SAN.

Extending the analogy, Nimble is me on a trip packing light, with a carry-on at most, while EMC and EqualLogic are my wife shelling out $100 to check two bags and asking me to put her oversize purse under my airplane seat. And the snacks in her oversize purse are the steak dinner offered by storage sales guys trying to pretend that all the extra baggage is in my best interest.
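The page-size concern raised above comes down to write amplification at snapshot granularity: if a snapshot must preserve whole pages, a tiny write pins an entire page. The sketch below assumes copy-on-write (or redirect-on-write) tracking at a fixed page size; the function name `snapshot_bytes` and both page sizes (4 KiB and 16 MiB) are hypothetical and are not any vendor's published specifications.

```python
KIB = 1024
MIB = 1024 * KIB


def snapshot_bytes(write_offsets: list[int], write_size: int, page_size: int) -> int:
    """Space a snapshot must preserve for a set of small writes.

    Assumes changes are tracked per page: any write, however small,
    pins every page it touches, and each page is counted once.
    """
    pages: set[int] = set()
    for offset in write_offsets:
        first = offset // page_size
        last = (offset + write_size - 1) // page_size
        pages.update(range(first, last + 1))
    return len(pages) * page_size


# 1,000 scattered 4 KiB writes, 64 MiB apart, between two snapshots.
offsets = [i * 64 * MIB for i in range(1000)]
small_pages = snapshot_bytes(offsets, 4 * KIB, 4 * KIB)
large_pages = snapshot_bytes(offsets, 4 * KIB, 16 * MIB)
print(f"4 KiB pages:  {small_pages / MIB:.1f} MiB preserved")
print(f"16 MiB pages: {large_pages / MIB:.1f} MiB preserved")
```

The same 4 MB of changed data costs about 4,000 times more snapshot space with the larger page, and that multiplier compounds over every retained snapshot, which is how long retention policies become impractical.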

My key point: get to know your candidate SANs' real-world numbers for the stats that matter to you, be it snapshot-to-used-space ratio, IOPS or rack space used.

Words of wisdom (it took me a while to figure this out): all vendors position snapshots as backups and tout their offsite replication schemes, but under the hood some vendors have snapshot architectures that don't really enable backup replacement, and replication architectures that chew up tons of bandwidth, forcing either larger pipes or the use of third-party backup software. The vendors that do it right have forced the ones that don't to talk about snapshot technology as if all snapshots were created equal and all efficiency technologies were the same. They are not.