Disclaimer

We are both a NetApp partner and a Nimble Storage partner. The information provided here is in no way meant to imply that one product is universally better than the other, as we view both as great products. This article simply explains why we picked one over the other for our use case and scale, and shares specific information about our environment that can hopefully help someone looking for detailed technical information.

It would be tough to go wrong with either solution as long as you have someone knowledgeable helping you understand your real storage requirements. I am also going to spare you Nimble’s CASL story and NetApp’s WAFL story, as there are many places on the Internet that do a better job than I would at explaining (selling) them.

Summary

By moving from our existing NetApp to Nimble Storage, we achieved a positive ROI compared with keeping our NetApp solution. We also gained performance (IOPS) and capacity (GB) by moving to Nimble.

We moved our private cloud environment from our NetApp FAS2040 (36 x 600GB 15K SAS disks and 26 x 1TB SATA disks), which was replicating to a partner NetApp, to a Nimble Storage CS460 replicating to a partner Nimble.

Catalyst for Making a Change

Our NetApp FAS2040 was running out of CPU during deduplication and SnapVault jobs in our backup windows. Part of our problem was that we had SATA disks (on an FC loop) and SAS disks (on a SAS loop) on the same head, so the performance limitations of the SATA disks (specifically write I/O saturating the shared NVRAM in the controller) caused latency on the SAS disks. The NetApp solution would have been to:

- Move the SATA loop to its own head (i.e. move from an active/passive head design to an active/active head design).

- Purchase more SAS disks to offload some of the I/O caused by too many VMs on SATA.

- Move to a 3xxx-series filer head, which we were probably about a year away from needing anyway, to handle the increasing CPU workload during deduplication and SnapVault jobs.

Another reason for making a change was that more and more of our customers use our private cloud late at night and have workforces that start in different time zones. These users were seeing lower performance due to deduplication jobs, DB verification jobs and SnapVault jobs, which eat up all of the FAS2040’s CPU during backup windows. Said another way, we are now truly running 24×7 with our private cloud, so the NetApp nightly jobs with high CPU and I/O requirements needed to be mitigated.

Fundamentally, the NetApp answer (and that of most other SAN solutions) to our growing private cloud environment would be more CPU and more disks. If we hadn’t found Nimble to drive a consolidation ROI, we would have upgraded our NetApp head to a 3xxx-series (more CPU and memory) and added more SAS disks (more I/O). We also would have evaluated FlashCache, which acts as a read cache, and FlashPool, which adds SSDs to an aggregate as a cache tier.

***See my comments at the bottom of this page: I am not comparing the 2040 to the CS460, but rather comparing my upgrade path from the 2040 to either a CS460 or a NetApp 3xxx-series***

Environment

Our environment is entirely built on VMware. The NetApp provides NFS exports to house the VMs. All of our database servers use iSCSI via the Microsoft iSCSI initiator to map their own DB and log volumes. Those servers also leverage the NetApp SnapManager for SQL/Exchange tools to manage log truncation and database verification. The VMware environment uses NetApp’s VSC tool to take snapshots of the virtual machines. Snapshots are used for all local backups and replicated off site using SnapVault. It is important to note that SnapVault undeduplicates (“rehydrates”) the data before replicating it. Rehydration makes it possible to have different retention periods on the partner NetApp, but requires more CPU and bandwidth than its cousin SnapMirror. We consider this design (NFS for VMs, iSCSI for databases, SnapX software for management) NetApp best practice for an IP-based SAN environment and for providing off-site data archives.

This environment houses about 100 VMs. The largest single VM is an Exchange 2010 server with about 700 mailboxes. I provided screenshots of the Exchange database and logs volume below.

The virtual environment is for our private cloud customers. Each customer is given a file server, a set of Citrix XenApp servers, Exchange mailboxes, any specific application servers they need, and their own private VLAN for security. Our customers don’t tend to have any I/O-intensive workloads and mostly use apps like Office, QuickBooks, and some sort of industry-specific line-of-business application. For internal use, we also host Kaseya, a Remote Monitoring and Management tool, and ConnectWise, our ticketing/PSA/CRM system. Both of those applications have SQL databases with modest I/O, with Kaseya being a little more needy.

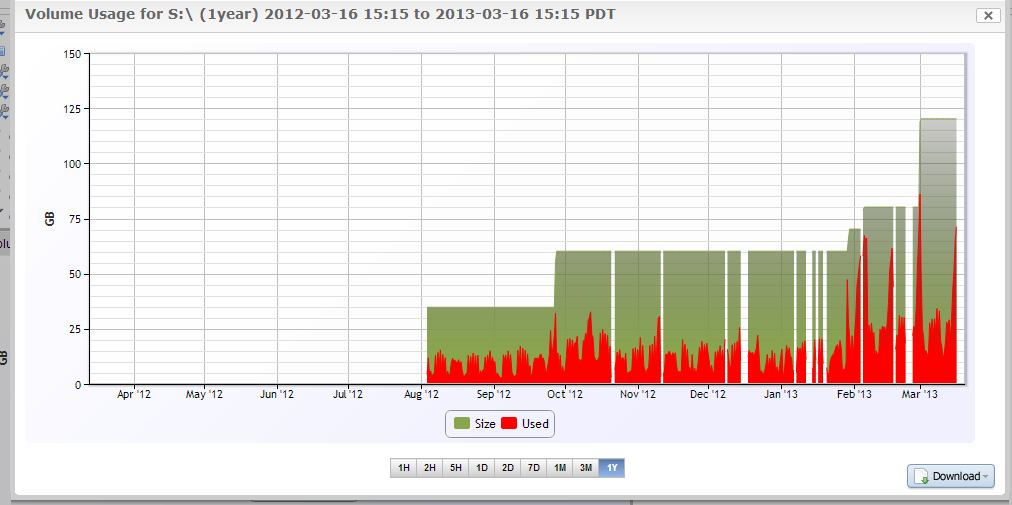

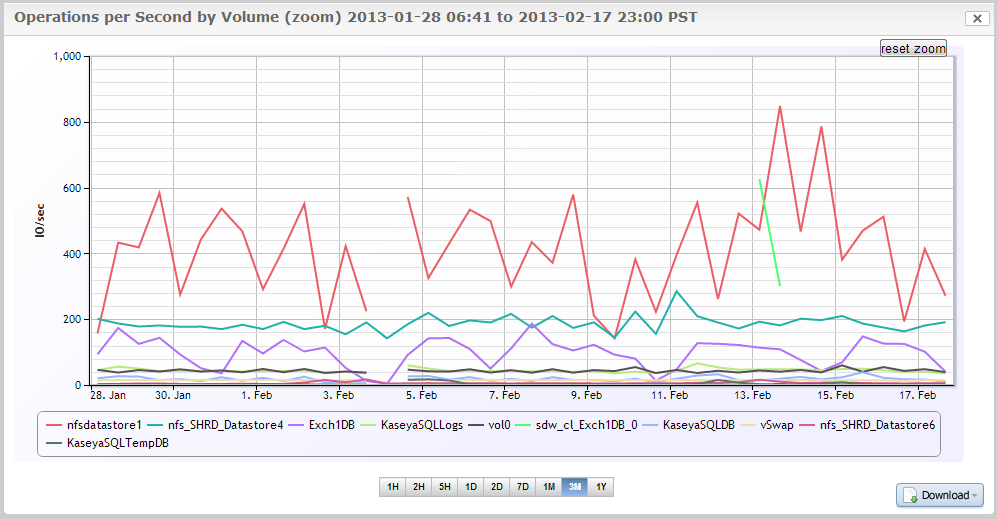

An overview of IOPS per volume for the SAS Aggregate on the NetApp FAS2040 prior to moving to Nimble:

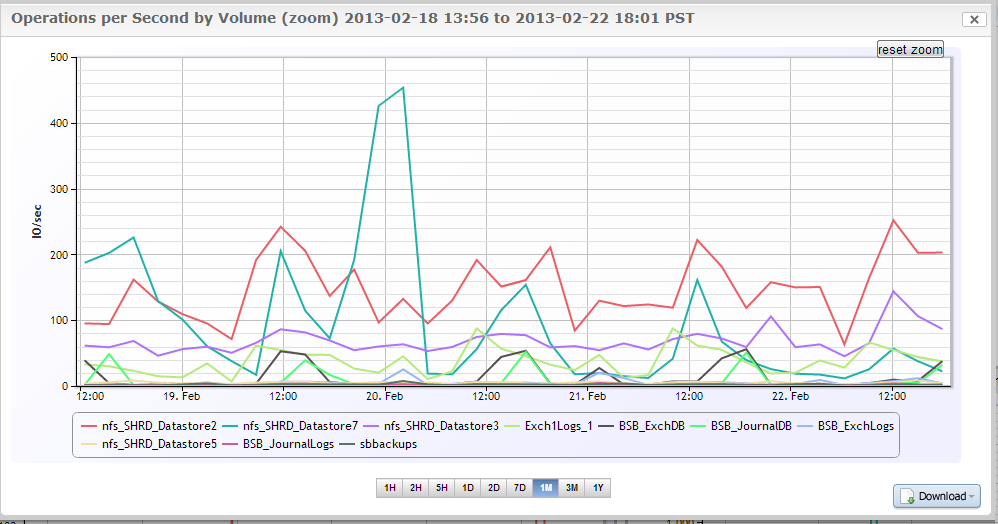

IOPS per volume on our SATA Aggregate on the NetApp FAS2040 prior to moving to Nimble:

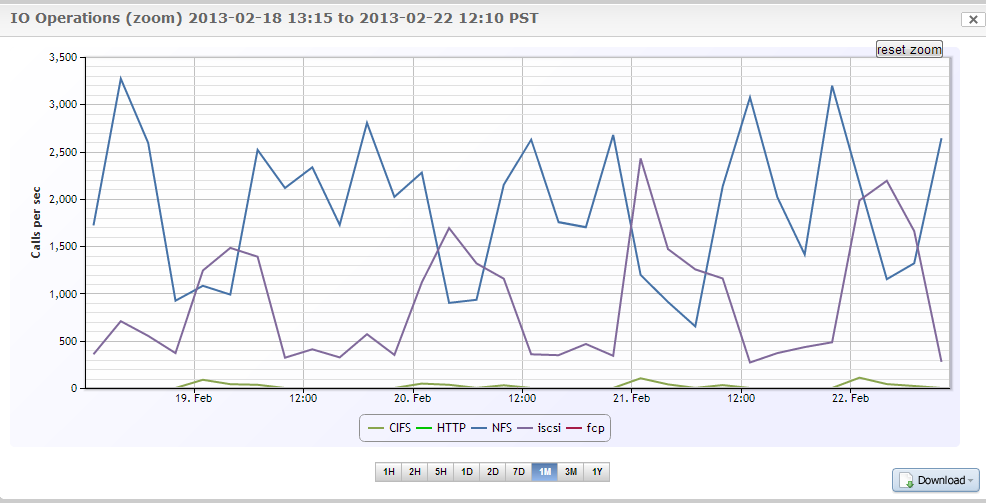

Global IOPS on the NetApp FAS2040 active controller prior to moving to Nimble. We are averaging about 2,000 IOPS on NFS, peaking at 3,000, and averaging 500 IOPS on iSCSI, peaking at 1,500.

There is a lot of debate about block sizes and read/write ratios when sizing SANs. This is a huge topic, but for an environment like ours, it is best to assume everything is 4K random with a 50/50 read/write mix. If you have specialized applications like VDI, or if most of your IOPS come from a single database or set of databases, then you will want to understand the application’s needs better for sizing. For general-purpose sizing, use 4K random 50/50 and you are unlikely to run into problems.
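As a minimal sketch of that rule of thumb, the Python below turns the FAS2040 averages and peaks quoted above into the read and write IOPS a replacement array would need to sustain. Adding the NFS and iSCSI peaks together assumes they happen at the same time, which is a conservative assumption for illustration, not something the graphs confirm.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WorkloadProfile:
    """General-purpose sizing rule: treat every I/O as 4K random, 50/50 read/write."""

    total_iops: int
    read_fraction: float = 0.5
    block_kib: int = 4

    @property
    def read_iops(self) -> float:
        return self.total_iops * self.read_fraction

    @property
    def write_iops(self) -> float:
        return self.total_iops * (1 - self.read_fraction)


# NFS + iSCSI figures from the FAS2040 graph. Summing the two peaks assumes
# they coincide (a conservative, illustrative assumption).
average = WorkloadProfile(total_iops=2_000 + 500)
peak = WorkloadProfile(total_iops=3_000 + 1_500)

for name, profile in (("average", average), ("peak", peak)):
    print(f"{name}: {profile.read_iops:,.0f} read + {profile.write_iops:,.0f} write IOPS "
          f"at {profile.block_kib}K random")
```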

Creating an ROI

We pay for space and power in our data center, as well as bandwidth. Had we continued down the NetApp path, we would have continued to consume more space, power and bandwidth as we grew. Nimble allowed us to cut each of those costs by at least half. The space and power savings are fairly obvious (we went from 15U of NetApp gear to 3U of Nimble, with the ability to double our VM density without adding U’s).

The bandwidth savings are a little trickier: you need to understand how NetApp handles space efficiency to understand why Nimble replication is more efficient. My CTO would be better at explaining this, but essentially, if you use SnapVault, which is a requirement if you need immutable backups, SnapVault actually “rehydrates” the deduplicated data when it replicates. Because of this, the receiving NetApp gets to build its own file table and keep its own file-level checksums in WAFL, making it an “immutable” backup. If you want to replicate the deduplicated data with NetApp, you need to use SnapMirror, which is more like an image-based replication and has dependencies on the primary NetApp. The key risk is that if someone deletes a snapshot on the primary, it will also disappear from the secondary. Said another way, to have a true SAN-to-SAN backup with different retention periods, you need SnapVault, not SnapMirror, and by design SnapVault doesn’t let you save bandwidth during replication. Nimble’s replication runs through its compression engine, so all replicated data is sent compressed, yet the result behaves more like SnapVault and can be used as an official offsite backup.
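To make the bandwidth argument concrete, here is a back-of-the-envelope sketch in Python. It assumes SnapVault sends changed data fully rehydrated (no savings on the wire, per the paragraph above) and that Nimble sends the same change compressed at the roughly 30% savings we see on our volumes. The 50 GB daily change and 20 Mbps WAN link are hypothetical numbers chosen for illustration, not measurements from our environment, and protocol overhead is ignored.

```python
def replicated_gb(changed_gb: float, savings: float) -> float:
    """GB sent over the WAN when a fraction `savings` is stripped before transfer."""
    if not 0 <= savings < 1:
        raise ValueError("savings must be in [0, 1)")
    return changed_gb * (1 - savings)


def transfer_hours(gb: float, link_mbps: float) -> float:
    """Hours to move `gb` decimal gigabytes (8,000 megabits each) over a `link_mbps` link."""
    return gb * 8_000 / link_mbps / 3_600


DAILY_CHANGE_GB = 50  # hypothetical daily change rate
WAN_MBPS = 20         # hypothetical replication link

snapvault_gb = replicated_gb(DAILY_CHANGE_GB, savings=0.0)  # rehydrated: nothing saved
nimble_gb = replicated_gb(DAILY_CHANGE_GB, savings=0.30)    # compressed at ~30%

for name, gb in (("SnapVault", snapvault_gb), ("Nimble", nimble_gb)):
    print(f"{name}: {gb:.1f} GB/day, ~{transfer_hours(gb, WAN_MBPS):.1f} h on {WAN_MBPS} Mbps")
```

The same function covers other scenarios by changing the hypothetical change rate, link speed, or savings fraction.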

Nimble Storage has been amazing so far

First thing to note: Nimble does what it says it will do. See the screenshots below that show 160,000 read IOPS (4K sequential, 100% read) and 40,000 write IOPS (4K random). This isn’t a realistic test, so please don’t take it to mean you will see these numbers in the real world, but it does prove out some of their technology designs.

CASL can turn random writes into sequential writes very effectively. Nimble says their units are bottlenecked by CPU, not disk, and that the SAS backplane will be saturated before the sequential write ability of the SATA disks becomes the limiting factor. My top technical engineer confirmed this, saying that if you take the aggregate sequential write performance of the 11 disks, you will saturate the 6Gbps SAS backplane before the SATA disks themselves limit you (assuming CASL can actually make everything look like sequential writes). If you assume that CASL works as advertised, and our results suggest it does, then it becomes all about the reads and cache hits.
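The arithmetic behind the random-to-sequential claim can be sketched in a few lines of Python. The per-disk figures (about 75 random IOPS and a conservative 100 MB/s sequential write for a 7.2K RPM SATA disk) are rule-of-thumb assumptions, not Nimble specifications. The sketch ignores parity and metadata overhead and does not model the SAS backplane itself.

```python
def sequential_mb_s(iops: float, block_kib: int = 4) -> float:
    """Decimal MB/s needed to lay down `iops` writes of `block_kib` KiB sequentially."""
    return iops * block_kib * 1024 / 1_000_000


# Assumed rule-of-thumb figures for one 7.2K RPM SATA disk (not vendor specs).
SATA_RANDOM_IOPS = 75
SATA_SEQ_MB_S = 100
DISKS = 11  # disk count quoted above

WRITE_IOPS = 40_000  # 4K random write result from the test above

random_ceiling = DISKS * SATA_RANDOM_IOPS   # if every write stayed random
sequential_ceiling = DISKS * SATA_SEQ_MB_S  # if writes are coalesced into stripes
needed = sequential_mb_s(WRITE_IOPS)

print(f"Random 4K ceiling: {random_ceiling} IOPS "
      f"(~{WRITE_IOPS / SATA_RANDOM_IOPS:.0f} disks needed for {WRITE_IOPS:,} IOPS)")
print(f"Sequential need: {needed:.1f} MB/s of ~{sequential_ceiling} MB/s available")
```

Under these assumptions, 40,000 random 4K writes would take hundreds of SATA spindles if they stayed random, but only a fraction of the 11 disks' sequential bandwidth once coalesced.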

This screenshot shows the 120,000 read IOPS and the corresponding throughput, as well as the 40,000 write IOPS and the corresponding throughput. A takeaway here is that with small-block (4K) I/O, throughput is not the limiting factor for a workload like ours: even 120,000 IOPS at 4K is only about 480MB/s (roughly 3.9Gbps), and our real-world peak of a few thousand 4K IOPS needs well under 1Gbps. For all those looking to move to 10Gbps, you might want to think again unless you really know you will need it. The throughput spikes in the graph are from large-block tests, which of course push far fewer IOPS. I don’t have the details of the settings for those large-block tests, but I could post them if people want them.
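The IOPS-to-throughput conversion is simply IOPS × block size × 8 bits. A small Python helper makes it easy to check benchmark and real-world numbers against link speeds. It ignores iSCSI/NFS and Ethernet protocol overhead, and the 4,500 figure is our FAS2040 NFS and iSCSI peaks added together.

```python
def gbps(iops: float, block_kib: float = 4) -> float:
    """Payload Gbps for `iops` operations of `block_kib` KiB (no protocol overhead)."""
    return iops * block_kib * 1024 * 8 / 1e9


samples = {
    "benchmark 4K reads": 120_000,
    "benchmark 4K writes": 40_000,
    "FAS2040 peak (NFS + iSCSI)": 4_500,
}

for label, iops in samples.items():
    print(f"{label}: {iops:,} IOPS -> {gbps(iops):.2f} Gbps")
# benchmark 4K reads: 120,000 IOPS -> 3.93 Gbps
# benchmark 4K writes: 40,000 IOPS -> 1.31 Gbps
# FAS2040 peak (NFS + iSCSI): 4,500 IOPS -> 0.15 Gbps
```

At 4K, a single 1Gbps link carries roughly 30,000 IOPS before overhead, which is why a workload peaking at a few thousand IOPS leaves plenty of headroom.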

Nimble’s story is all about cache hits combined with CASL writes. If the cache can soak up 90% of the reads, then CASL with NVRAM can make writes super fast and sustainable. That’s really the magic of the whole system. I would argue that for any generalized workload you will easily achieve 80%-90% cache hits. If you don’t know whether you have a specialized or generalized workload, then you either have a generalized workload or they hired the wrong guy to do the SAN research.

I will give you an example of a workload that won’t work as well on Nimble, or more specifically, that will make Nimble perform like a more traditional SAN. If you have an application that writes lots of data sequentially, Nimble will not put that data in flash on write. When you run something like a report that requires the data, it will come from disk the first time, making it slow (normal SAN speeds, really). If that report is never run again, you never get the benefit of flash, and your read cache hits for that workload will be too low for the cache to help. The end-user experience would be closer to a traditional SAN. For our environment and most of our customers this is not an issue.

To clarify this point further, you need to understand average latency versus worst-case latency. If you want to absolutely maximize performance, you want to minimize latency (always), and performance will be dictated by the highest outlier. Violin Memory and the other all-flash players minimize latency deviations and so deliver consistently low latency, always. Nimble focuses on average latency, because in most general workloads a couple of outliers have no noticeable user impact.
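The cache-hit argument is a weighted average, sketched below in Python. The 0.5 ms flash and 10 ms disk latencies are hypothetical round numbers, not Nimble measurements, and 2,250 read IOPS is half of a 4,500 IOPS peak (our FAS2040 NFS and iSCSI peaks added together) under the 50/50 rule. Every miss still pays the full disk latency; the average hides those outliers, which is the average-versus-worst-case trade-off described above.

```python
def avg_read_latency_ms(hit_rate: float, flash_ms: float, disk_ms: float) -> float:
    """Mean read latency when `hit_rate` of reads are served from flash, the rest from disk."""
    return hit_rate * flash_ms + (1 - hit_rate) * disk_ms


def disk_read_iops(read_iops: float, hit_rate: float) -> float:
    """Reads that miss the flash cache and fall through to the SATA disks."""
    return read_iops * (1 - hit_rate)


FLASH_MS, DISK_MS = 0.5, 10.0  # hypothetical latencies for illustration
READ_IOPS = 2_250              # half of a 4,500 IOPS peak (50/50 rule)

for hit in (0.5, 0.8, 0.9):
    print(f"{hit:.0%} hits: avg {avg_read_latency_ms(hit, FLASH_MS, DISK_MS):.2f} ms, "
          f"{disk_read_iops(READ_IOPS, hit):.0f} reads/s reach disk, "
          f"misses still take {DISK_MS} ms")
```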

Management of the Nimble

Thus far management has been straightforward and simple. We have many more volumes now, since we aren’t trying to maximize the number of VMs in a single volume for deduplication. Maximizing the number of “like” files on a given volume is how you get efficient deduplication on the NetApp, and I did really love the concept of large NFS volumes rather than lots of smaller iSCSI volumes. Volume creation is done in the Nimble web GUI, although Nimble does have a VMware plugin. Compared to NetApp’s, the plugin is pretty lame and needs work. SNMP support is very basic and at this point only works at a global level rather than per volume. The web GUI does provide the kind of data a traditional SNMP monitoring tool would, but we would obviously prefer to get that data into our single pane of glass (LogicMonitor).
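The “like” files point is easy to see with a toy model of block-level deduplication inside one volume. This Python sketch only illustrates the principle and is not how WAFL implements deduplication. The OS and data sizes are hypothetical, random bytes stand in for real VM contents, and it needs Python 3.9 or later for `random.Random.randbytes`.

```python
import hashlib
import random

BLOCK = 4096  # 4K blocks, the granularity assumed for this toy model


def dedupe_savings(vm_images: list[bytes]) -> float:
    """Fraction of blocks removed if each unique 4K block in the volume is stored once."""
    blocks = [img[i:i + BLOCK] for img in vm_images for i in range(0, len(img), BLOCK)]
    unique = {hashlib.sha256(b).digest() for b in blocks}
    return 1 - len(unique) / len(blocks)


rng = random.Random(42)


def random_blocks(count: int) -> bytes:
    """Stand-in for data that is unique to one VM (or one OS build)."""
    return rng.randbytes(count * BLOCK)


OS_BLOCKS, DATA_BLOCKS, VMS = 64, 16, 10  # hypothetical sizes

shared_os = random_blocks(OS_BLOCKS)
like_volume = [shared_os + random_blocks(DATA_BLOCKS) for _ in range(VMS)]
mixed_volume = [random_blocks(OS_BLOCKS) + random_blocks(DATA_BLOCKS) for _ in range(VMS)]

print(f"10 VMs, same OS build:       {dedupe_savings(like_volume):.0%} saved")
print(f"10 VMs, different OS builds: {dedupe_savings(mixed_volume):.0%} saved")
```

Ten VMs sharing one OS build store that build once, while ten different builds share nothing, which is why grouping like VMs per volume matters for NetApp deduplication.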

Reliability and Redundancy

It is early to claim victory, but so far so good. My technical team tested all the controller failover and RAID rebuild scenarios that we would expect to experience eventually, and all worked as advertised. I have heard that if two disks fail at the same time, the system shuts down to avoid potential data loss. I don’t like that on the surface, but it is actually a smart design decision. I can only have 1 hot spare per shelf, which is a weakness, but that’s probably the right ratio for the number of disks on a shelf. Nimble took away the RAID group/RAID type design, hot spare choices and tiering design that we have traditionally had to think about. For most people this is actually a good thing. There are probably some workloads where this is limiting, but I bet that of the people who complain these choices were taken from them, only about 10% have a justifiable point. If you understand CASL and cache hits, then you also understand why this doesn’t matter for Nimble: other RAID types would offer it no advantage.

Networking

With Nimble Storage we have to use MPIO rather than LACP to achieve network redundancy. Given VMware’s new ability to support LACP, this seems like a downgrade rather than an upgrade. However, MPIO does have some advantages in that it can be a little smarter than LACP in how it distributes traffic. MPIO makes the host’s storage stack aware of the redundant paths so it can make smart decisions about which path to send each I/O on, whereas LACP hides those details from the host in the switching/networking layer. Given the option, I would rather use LACP, because with MPIO we have to install the MPIO driver on each hypervisor and on any guest that will have iSCSI volumes using the Microsoft iSCSI initiator (e.g. Exchange). It hasn’t been a problem yet, and MPIO is so well understood in the industry that it’s probably not a huge deal for most environments.
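A toy Python model shows the distribution difference. LACP picks a link by hashing each flow, so a single iSCSI session stays on one link, while a round-robin MPIO policy spreads I/Os across paths. The CRC32 hash, the addresses, and the `ios_per_switch` parameter are illustrative stand-ins (the last is loosely modeled on the I/O-count setting of VMware’s Round Robin path policy); real switch hash policies and multipathing drivers differ in detail.

```python
import zlib
from collections import Counter
from itertools import cycle

LINKS = 2


def lacp_link(src_ip: str, dst_ip: str, src_port: int, dst_port: int, links: int = LINKS) -> int:
    """Toy hash-based link choice: all packets of one flow map to the same link."""
    key = f"{src_ip}:{src_port}->{dst_ip}:{dst_port}".encode()
    return zlib.crc32(key) % links


def mpio_round_robin(n_ios: int, paths: int = LINKS, ios_per_switch: int = 1) -> Counter:
    """Toy round-robin path policy: move to the next path every `ios_per_switch` I/Os."""
    counts: Counter = Counter()
    next_path = cycle(range(paths))
    path = next(next_path)
    for i in range(n_ios):
        if i and i % ios_per_switch == 0:
            path = next(next_path)
        counts[path] += 1
    return counts


# One iSCSI session is one TCP flow (hypothetical addresses; 3260 is the iSCSI port).
flow = ("10.0.0.10", "10.0.0.50", 51_000, 3260)
lacp = Counter(lacp_link(*flow) for _ in range(1_000))
mpio = mpio_round_robin(1_000)

print("LACP, one flow:      ", dict(lacp))  # every I/O on a single link
print("MPIO round robin (1):", dict(mpio))  # I/O split evenly across both paths
```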

Compression

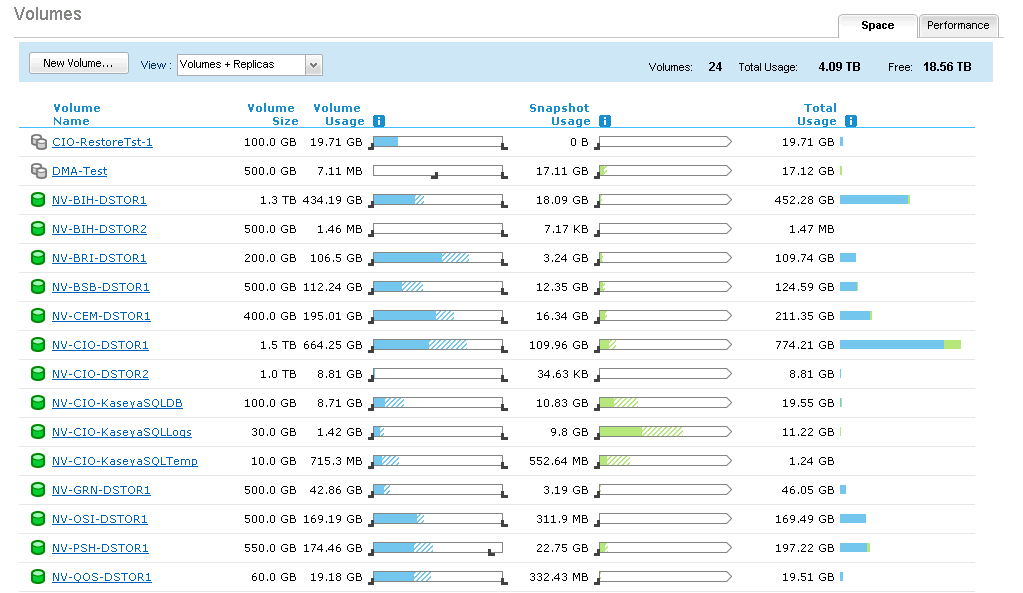

We see 30% compression, not 2X. File servers don’t tend to compress well, nor do Exchange 2010 mailboxes. I don’t know who is seeing the 2X compression that Nimble Storage always advertises, unless they have only SQL databases on the SAN. Nimble’s sales staff seem to have pulled back from the 2X push and now say 30-50%. See the screenshot above of some of our volumes and their compression ratios. In the NetApp world we would see about 40-50% deduplication, so from an efficiency perspective, compression doesn’t seem to be as good as deduplication for our VMware and Exchange workloads.
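Percent savings and “X” ratios are easy to mix up when comparing vendor claims, so here is a small Python conversion. It assumes “30%” above means 30% space savings; if it instead means a 1.3X ratio, the savings are closer to 23%. On the savings reading, 2X equals 50% savings, so a 30-50% range works out to roughly 1.43X to 2X, and 40-50% deduplication to roughly 1.67X to 2X.

```python
def ratio_from_savings(savings: float) -> float:
    """Convert fractional space savings (0.30 = 30% smaller) into an N:1 ratio."""
    return 1 / (1 - savings)


def savings_from_ratio(ratio: float) -> float:
    """Convert an N:1 ratio (2.0 = 2X) into fractional space savings."""
    return 1 - 1 / ratio


for savings in (0.30, 0.40, 0.50):
    print(f"{savings:.0%} savings = {ratio_from_savings(savings):.2f}X")
for ratio in (1.3, 2.0):
    print(f"{ratio}X = {savings_from_ratio(ratio):.0%} savings")
```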

What we miss from NetApp

The NFS/VMware story on NetApp is so powerful, and yet so simple. NFS with VMware really is a more elegant storage solution than iSCSI. I thought I might change my mind after more time using Nimble Storage, but I am still convinced that for ease of use and management, NFS is king for VMware environments. Technically, iSCSI and NFS are pretty even, provided the SAN has a good VAAI implementation, but we still miss the simplicity of NFS. NetApp software (SnapManager for VI/SQL/Exchange) is easily 10+ generations ahead of Nimble’s offering. Nimble has VMware plugin software in the same way that IT has robust applications for automating all its tasks… we call those robust applications scripts. NetApp has great SNMP/API/PowerShell support that allows 3rd-party tools like LogicMonitor to really help you get monitoring data out of it. Nimble has a pretty good web interface that provides similar info, but having to use the web GUI kills the single-pane-of-glass story that we are trying to achieve with our monitoring tools.

Most of the things that we miss on the NetApp are management conveniences, and at this point, they really do seem only marginally beneficial for our environment.

We have sold and installed numerous NetApps over the years, including our own, and have never had a controller failure. This is a great track record, and anytime you bring in a new vendor that uses SuperMicro hardware, you worry if they can match that same track record. This is probably my biggest worry. SuperMicro hardware has failed for our customers, and I just have the impression that it won’t be as reliable as what we have seen from NetApp over the past 10 years working with them.

What we found that we didn’t miss

NetApp WAFL/Snapshots/Deduplication really set the bar for the whole storage efficiency story, not to mention the unified storage story. However, to optimize a NetApp environment you really have to have a deep technical understanding of what is happening under the hood on the SAN (or be really good at following best practices white papers). For example, to maximize deduplication, you want as many VMs with the same OS build as possible in the same volume. However, to minimize CPU load during deduplication or SnapVault jobs, you want smaller volumes. Also, you want deduplication jobs to always happen before snapshots… or is it after? We have customers with 200 VMs and 30 SQL databases, as well as NFS for Unix and CIFS for Windows file shares, all on the same NetApp. Complex environments like that require NetApp and a high-level operator, and the price is justified, but for our environment it isn’t needed. NetApp still essentially dominates the unified storage story, but many purely virtual environments don’t need unified storage.

With NetApp, there was a certain level of complexity that we were juggling in our heads (and we were good at it), but now that we have inline compression, the complexity of the CPU/snapshot/deduplication/SnapVault relationships and SATA aggregate vs. SAS aggregate decisions is gone. It’s a clean feeling, and it lets our top technical talent focus on other problems, such as managing NetApps in our customer environments, where that level of complexity is required.

***I want to be clear: elegance and complexity are not always opposites. NetApp’s architecture is the most elegant for what it does well (multiprotocol); however, the nature of multiprotocol, combined with powerful technologies like snapshots, SnapVault, and deduplication, makes it more complex even though it is still quite elegant***

Alternatives

We evaluated many different alternatives, although to be fair we didn’t bring all the units in for testing. Being a VAR, we have a lot of emotional baggage that prevents us from being unbiased. Here is my “unscientific” view:

Nimble vs Violin Memory – Violin is great if I need 1 million IOPS out of a 3U device and all my customers are running Oracle DBs. Violin Memory’s target market is not our environment. They have a great story for really high-performance environments, but that’s not us.

Nimble vs Fusion IO Cards – I don’t really understand this model for generalized workloads so I stayed away. Distribute PCI Flash to every server and then what?

Nimble vs EMC – Not a fan, probably because we are NetApp partners. VNX is not as elegant as NetApp or Nimble, but even if it were, I would enjoy allowing my NetApp partner bias to cloud my judgment.

Nimble vs Tintri – I couldn’t wrap my head around why I would want storage that only works with VMware, so we didn’t speak with them. I think there is a big difference between a new start-up and a new start-up with a new paradigm shift. Tintri seems to be the latter, and from a risk perspective (risk to the buyer), it seems to have the worst of both and only marginal upside.

Nimble vs Nutanix – Nutanix is such a big paradigm shift in storage that I couldn’t bet the house on it. There is lots of promise in this company; I just don’t want to jump too quickly on a proprietary solution (server and SAN) built on commoditized hardware that can only be purchased through them, which makes both my server and SAN purchases proprietary. If I were looking at a fixed VDI workload of, say, 1,000 desktops, I would check out this company, although I also really like Nimble’s VDI story.

Nimble vs EqualLogic – I have some trusted (business) contacts that successfully run this platform; typically they are the most price-conscious rather than the most value-conscious or technical. I think the solution is mediocre. The price is great, but I don’t trust Dell’s ability to innovate; maybe that will change once they are private. The big technical issue I always hear about EqualLogic is its 16MB page size: if a single 4K block changes, the whole page is marked for replication to its partner as well as captured in the snapshot. So if your deltas are 1GB worth of 4K blocks a day, the actual space for snapshots and bandwidth used for replication could be 10 or 20X that. If this is true, it would kill us on bandwidth and extra storage for snapshots. I suspect the people using EqualLogic use Veeam or PHDVirtual or some other non-SAN-based backup solution because of this limitation.
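The page-size concern can be illustrated with a small Python simulation. It takes the 16MB page size described above at face value (treated here as an assumption, not a verified specification) and compares 1GB of 4K changes that are clustered together with the same amount scattered randomly across a hypothetical 500GB volume. In this model the worst case is 16MB / 4K = 4,096X; real workloads fall somewhere between the two scenarios, depending on how localized their writes are.

```python
import random

KIB = 1024
MIB = 1024 * KIB
GIB = 1024 * MIB
BLOCK = 4 * KIB
PAGE = 16 * MIB  # page size as described above; treat as an assumption


def replicated_bytes(changed_offsets, page: int = PAGE) -> int:
    """Bytes captured/replicated when any changed block dirties its whole page."""
    return len({offset // page for offset in changed_offsets}) * page


CHANGED_BLOCKS = GIB // BLOCK        # 1 GiB of 4K changes per day
VOLUME_BLOCKS = 500 * GIB // BLOCK   # hypothetical 500 GiB volume

clustered = [i * BLOCK for i in range(CHANGED_BLOCKS)]  # best case: contiguous changes
rng = random.Random(7)
scattered = [b * BLOCK for b in rng.sample(range(VOLUME_BLOCKS), CHANGED_BLOCKS)]

for name, offsets in (("clustered", clustered), ("scattered", scattered)):
    amplification = replicated_bytes(offsets) / (CHANGED_BLOCKS * BLOCK)
    print(f"{name}: {amplification:.0f}X the changed data")
```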

Nimble vs Compellent – My top technical guy hates the tiering concept, as he sees it fail all the time when dumb sales reps under-provision the top tier in order to win the deal. I could overlook this and size the top tier correctly, but when you combine it with a confusing Dell storage story, I would prefer to pass. Also, even though this is anecdotal, I haven’t seen too many Compellent customers go through a refresh, say five years later, without shopping the hell out of them. Our NetApp customers seldom shop when a refresh is due, as they typically have been very happy with the product and the service. If they do, it’s just to price check, and they don’t end up switching unless the price is way too high. Of course, I would only see the ones shopping, not the ones who are happy, so I recognize the bias.

Nimble vs Hitachi Data Systems (HDS) – I don’t know much about them but the people in my circles have never mentioned anything good or bad, so I passed. I have seen them at some conferences and understand they have a good product. However, I only have so much time in the day for research and testing… sorry Hitachi.

Nimble vs HP 3Par – This is an interesting solution, but I am told its strength is scale-out, which isn’t our environment. I love the scale-out model in theory, but in practice, upgrading the heads regularly with Moore’s law on our side seems more practical. If they weren’t owned by HP, I probably would have looked deeper to validate my thoughts, but HP (like Dell) also has a disjointed storage story.

Nimble vs HP LeftHand – This product sort of reminds me of EqualLogic in that people who buy it never rave about it and the technology elite never mention it. That, combined with HP’s diluted storage story, kept me away.

Nimble vs ZFS Players – There seem to be a lot of ZFS players around. I was cautioned by a trusted source that no one officially supports ZFS except Oracle. All the others are using a version of ZFS forked before Oracle closed the open source door. This means that ZFS on non-Oracle platforms is “open source supported” by some tiny company that didn’t actually create ZFS and is trying to make “value add” modifications. I understand that Red Hat successfully did this “open source value add” model, but that doesn’t mean I would have bought into Red Hat when they were a 40-person start-up company. I trust open source and SAN the same way I trust virtualization and white box servers. For those successfully running this type of configuration, please spare me the comments, as you lose instant credibility unless your reasoning is “we did it because it was cheap”.

Nimble vs Palo Alto Networks – This is just a plug. Palo Alto Networks doesn’t do SAN at all, but they have the best firewall on the market (technically a next-generation firewall). Nobody who uses one and then has to go back to another firewall is ever happy again.

————————————————————————————————————

I received some feedback and questions as to why we didn’t use a NetApp FAS2240 with FlashPool or a NetApp 3xxx-series with FlashCache. We looked at those options as well, but the challenge is write speed. If I want to use high-density SATA disk with FlashPool or FlashCache, my write IOPS are limited by WAFL’s ability to write to SATA. With a 3xxx-series and FlashCache, I would also need to give up extra rack units for the heads, as the 3xxx-series head is a dedicated chassis with no space for disks.

The FAS2240 doesn’t do FlashCache, which limits me to FlashPool technology. We did seriously consider the FAS2240 with 6 SSDs (FlashPool) and 18x 2TB SATA disks, but ultimately were not convinced that it could handle the write IOPS needed, nor were we convinced that flash with high-density disk has been a focus for NetApp. We see NetApp focusing on cluster mode (ONTAP C-Mode), which is great for some environments, just not ours.

The other answer is that our NetApp FAS2040 is only 2 years old, so any solution that stayed NetApp would need to figure out how to leverage that investment. Also, the current FAS2040 actually has 12 disks inside the head unit but can’t be converted into a shelf, which makes the upgrade path much more difficult.

—————————————————————————————————

We continue to receive feedback that this isn’t an apples-to-apples comparison (i.e. a 2040 vs. a CS460). In case this wasn’t made clear: the 2040 was the platform we were on, and the CS460 was the next-generation platform we needed. I see it as closer to the NetApp 3xxx-series, which was our other realistic option. Even though the system we were moving from was at the low end of NetApp’s line, our NetApp upgrade path based on data (customer) growth was putting us into the 3xxx-series. Both possible NetApp solutions for us can make good use of flash: the 2240 can use FlashPool and the 3xxx-series can use FlashCache. There were two fundamental problems with NetApp’s solution for us:

1.) NetApp still does not like you to design their systems with SATA if the workload is going to be write-intensive. This means that to get the 25TB of usable space that the CS460 provides, I would need far more space and power, as well as a higher price point. Once I know my disk requirements, I can pick my head: either a 2240 with FlashPool or a 3xxx-series head with FlashCache. If I choose the 3xxx-series and want failover to work, I need to buy FlashCache for both heads. But then I can’t run active/passive unless I want one of the FlashCache cards to sit idle, so I need an active/active design. The active/active design eats up more disks, because I now have two separate RAID groups, each with its own dual-parity protection (at scale this is a moot point, but initially it matters). The active/active design is solid, but it drives up costs, especially since I can’t get the write performance I need from SATA and have to use SAS. Big picture: no ROI, just an expense.

2.) The solution described above doesn’t solve space, power or bandwidth. To lower my replication bandwidth costs with NetApp, I would need to SnapMirror across the WAN rather than SnapVault, which would replicate deduplicated data rather than rehydrated data. However, SnapMirror isn’t an official backup, so I would then need to SnapVault the mirror. This is a costly design.
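The extra disks that an active/active design eats can be sketched with simple arithmetic in Python. This toy model assumes a hypothetical 24-disk starting point, one RAID-DP group (two parity disks) per aggregate, and one hot spare per aggregate. It ignores root volumes and RAID group size limits, so it only captures the small-scale case where, as noted in point 1, the penalty matters.

```python
def data_disks(total: int, aggregates: int, parity: int = 2, spares: int = 1) -> int:
    """Disks left for data when each aggregate is one RAID-DP group plus its own hot spare."""
    if total % aggregates:
        raise ValueError("this toy model splits disks evenly across aggregates")
    per_aggregate = total // aggregates
    return aggregates * (per_aggregate - parity - spares)


DISKS = 24  # hypothetical starting disk count

for layout, aggrs in (("active/passive", 1), ("active/active", 2)):
    data = data_disks(DISKS, aggrs)
    print(f"{layout}: {data} of {DISKS} disks hold data ({1 - data / DISKS:.0%} overhead)")
```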

If there are flaws in my logic, please let me know.

———————————————————————————————————————

I had my CTO chime in as well. Here are his thoughts:

We could add some of the operational pros/cons:

1) Things we don’t miss:

- The inconsistencies in scheduling snapshots. Sometimes you use the NetApp scheduler, sometimes the Windows Scheduler. The Nimble scheduler is well thought out and easy to use in the GUI. It is great to use the scheduler built into the device for all backup jobs and not have to resort to the Windows Scheduler (or a front end) for application-consistent snapshots.

2) Things we are not sure if we miss:

- The GUI tools for extending a drive from Windows. The SnapDrive GUI really is great, and insulates Windows Administrators from the storage details. Otherwise, the Windows Admins have to have logins to the Nimble.

- The ability to run an automatic database consistency check after a snapshot, to ensure the backup is correct, and the ability to run it on a different machine if necessary.

3) Things we do miss:

- Multiple accounts and role-based access.

- A fully supported PowerShell interface. We had several scripts for creating volumes and checking the status of replicas.